🎯 Don’t Ask If a GPU Is Available — Ask Which One You Need (and Where You Can Get It)

As demand for AI, deep learning, and high-performance computing skyrockets, one question keeps slowing teams down:

“Is the GPU I need available right now from my usual cloud provider?”

Too often, the answer is “no.” Whether due to capacity limits, stock shortages, or regional quotas, access to GPUs is becoming a bottleneck.

But what if you changed your approach entirely?

Instead of depending on a single provider, what if you could ask:

➡️ “What GPU do I need?”

➡️ “Who has it?”

➡️ “At what price?”

➡️ “Can I deploy there — now?”

That's the promise of a smart multi-cloud GPU strategy — and exactly what LayerOps delivers.

🚨 The Real Problem: GPU Shortages in the Cloud

The rise of AI has pushed cloud GPU demand to its limits. You've probably seen these messages:

“Quota exceeded”

“No capacity in selected zone”

“Provisioning failed: resource unavailable”

These are more than technical hiccups — they delay product launches, eat up engineering time, and reduce your team’s agility.

💡 The Better Question: What GPU Do You Actually Need?

Instead of waiting and hoping your usual provider has that NVIDIA A100 or L4 available, start by identifying which GPU your workload actually needs (model, memory, budget), then check:

- Which providers currently offer that GPU model?

- What’s the price per hour (or second)?

- In which regions is it available?

- Can you switch providers easily if one runs out?

Spoiler: if the answer to that last one is “no,” you’re locked in.
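The checklist above boils down to a simple selection rule: filter the offers by GPU model, stock, and region, then take the cheapest one. The Python sketch below illustrates that rule on a hand-written offer list. The provider names, regions, prices, and the `pick_offer` / `cost_for` helpers are all hypothetical placeholders, not real market data and not the LayerOps API.

```python
import math
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class GpuOffer:
    provider: str          # hypothetical provider name
    region: str            # hypothetical region name
    gpu_model: str         # e.g. "L4", "A100"
    price_per_hour: float  # illustrative EUR/hour, not real pricing
    in_stock: bool


def pick_offer(offers: Iterable[GpuOffer], gpu_model: str,
               allowed_regions: Optional[Set[str]] = None) -> Optional[GpuOffer]:
    """Return the cheapest in-stock offer for gpu_model, or None if nobody has it."""
    candidates = [
        o for o in offers
        if o.gpu_model == gpu_model
        and o.in_stock
        and (allowed_regions is None or o.region in allowed_regions)
    ]
    return min(candidates, key=lambda o: o.price_per_hour, default=None)


def cost_for(offer: GpuOffer, seconds: int, per_second_billing: bool = True) -> float:
    """Estimate the cost of a run, billed per second or rounded up to whole hours."""
    if per_second_billing:
        return offer.price_per_hour * seconds / 3600
    return offer.price_per_hour * math.ceil(seconds / 3600)


# Hypothetical offer list: placeholder providers and prices only.
OFFERS = [
    GpuOffer("provider-a", "region-1", "L4", 1.00, in_stock=False),
    GpuOffer("provider-b", "region-2", "L4", 1.20, in_stock=True),
    GpuOffer("provider-c", "region-1", "L4", 0.90, in_stock=True),
    GpuOffer("provider-b", "region-2", "A100", 3.00, in_stock=True),
]

best = pick_offer(OFFERS, "L4")
if best is not None:
    # Compare a 90-minute run under both billing models.
    print(best.provider, cost_for(best, 5400), cost_for(best, 5400, per_second_billing=False))
```

If the chosen provider runs out of stock, its `in_stock` flag flips and rerunning `pick_offer` simply returns the next cheapest option. That is the “can you switch providers easily?” question in miniature: it only works if your deployment is portable enough to follow the result.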

🔄 Enter LayerOps: Multi-Cloud GPU Without the Lock-In

LayerOps is a European CaaS (Container-as-a-Service) platform that makes multi-cloud GPU usage as easy as deploying a single container:

✅ Automatically deploy your services across multiple European cloud providers

✅ See and compare GPU models (A100, L4, H100, T4, etc.) across clouds

✅ Instantly launch services on the provider with available stock

✅ Scale to zero when idle, and optimize your GPU cost

✅ Re-deploy on another cloud in seconds if stock runs out or pricing changes

🚀 Result? No more waiting for capacity. Your AI services stay online, scalable, and portable.

🌍 Sovereign, Smart, Scalable

LayerOps integrates only with European, sovereign cloud providers, such as OVHcloud, Scaleway, Exoscale, Outscale, and Infomaniak. That means:

- Your data stays protected from extraterritorial laws such as the US CLOUD Act

- Your infrastructure is resilient and flexible

- You gain full independence from hyperscalers

- And your AI workloads are optimized and scalable on demand

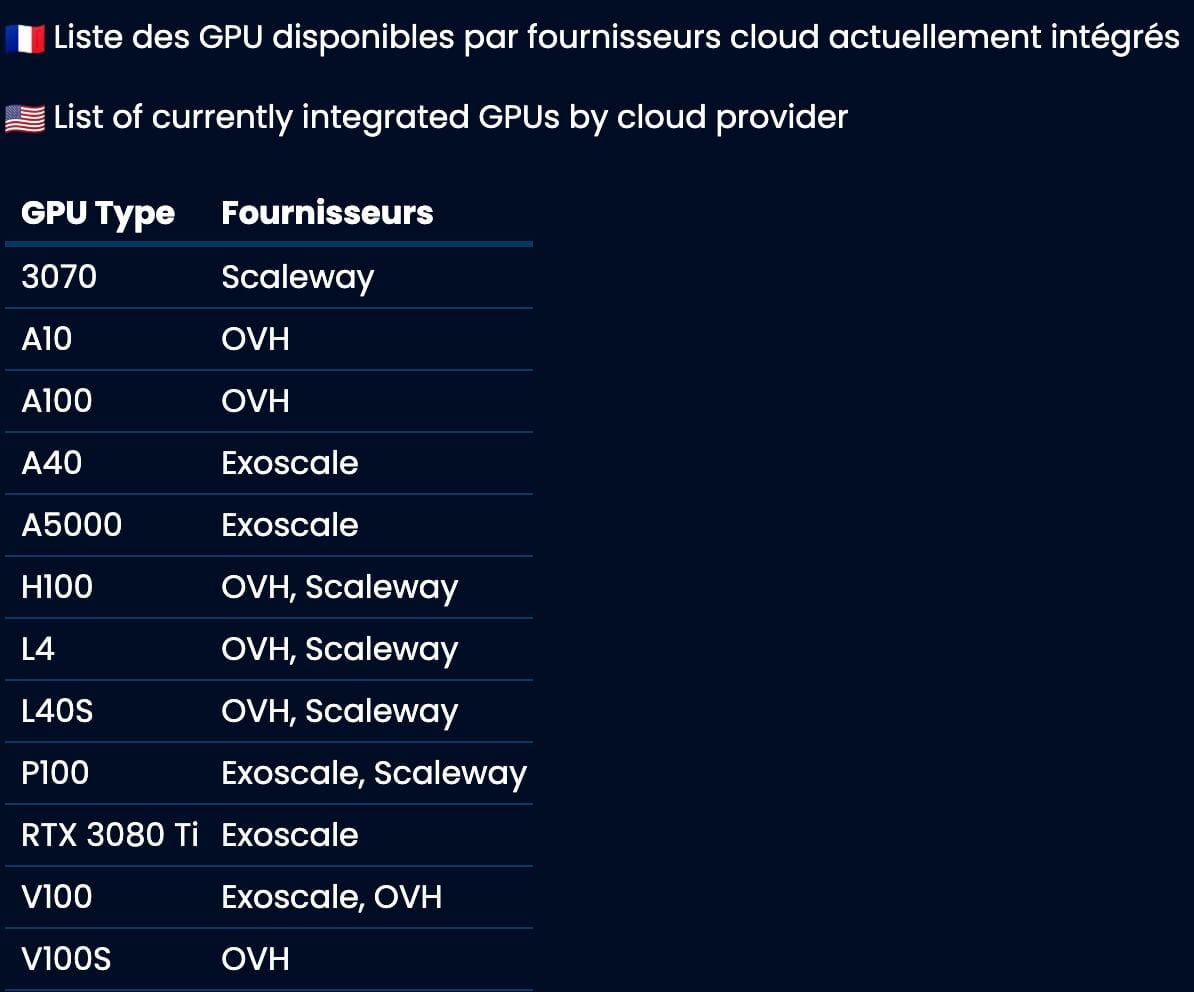

📊 Updated List of Available GPU Resources

Want to see what’s available right now? We maintain a public list of all GPU types by cloud provider:

👉 GPU availability by provider

🎁 Want to Try It Out?

We offer free cloud credits with several EU cloud providers — ideal for testing multi-cloud GPU strategies without upfront costs. Reach out and let’s get you started.

Final Thought

The future of cloud isn’t one provider — it’s multi-cloud intelligence.

The future of GPU access isn’t “hope it’s in stock” — it’s “launch it wherever it’s ready.”

With LayerOps, your infrastructure finally adapts to your needs — not the other way around.

👉 Explore more: https://www.layerops.io

#MultiCloud #GPU #CloudComputing #AIInfrastructure #GPUAvailability #Portability #LayerOps #HybridCloud #CloudSovereignty #EuropeanCloud

Worried about depending on US cloud providers?

LayerOps offers you a sovereign, multi-vendor, secure European alternative.